1581

Interactive hand gestures for HoloLens rendering control of real-time MR images

1 Biomedical Engineering, Case Western Reserve University, Cleveland, OH, United States
2 Interactive Commons, Case Western Reserve University, Cleveland, OH, United States
3 Department of Radiology, School of Medicine, Case Western Reserve University, Cleveland, OH, United States

Synopsis

User interactions via hand gestures were added to a real-time data acquisition, image reconstruction, and mixed-reality display system so that the user can interact more flexibly with the rendering. Images at pre-calibrated slice locations are acquired and displayed to the user in real time. The user can toggle between viewing only the slices currently being imaged and all pre-calibrated slices, as well as rotate and resize the rendering and dynamically adjust its window and level.

Introduction

It has previously been shown that multi-slice planar and volumetric MR images can be acquired, reconstructed, and displayed as 3D holograms in real time [1,2,3]. In that setup, the user explored a dataset fixed in space by walking or leaning around it. However, such large-scale movements may be impractical in an interventional setting. Here we propose a set of interactions that allow the user to rotate and resize the rendering using intuitive hand gestures. To increase the flexibility of the rendering, we also describe dynamic window and level adjustment and runtime “target slice” selection from a set of pre-initialized positions.

Methods

MR data for multi-slice 2D cardiac images were acquired using an undersampled spiral trajectory (12 of 48 arms acquired) and reconstructed using through-time spiral GRAPPA in the Gadgetron framework [4]. Using a pre-calibration scheme [3], GRAPPA weights were calculated for up to 12 slice positions at the beginning of the imaging session, allowing subsequent accelerated imaging at any subset of these locations.
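The bookkeeping implied by the pre-calibration scheme can be sketched as a per-slice weight store: weights are computed once per slice position at the start of the session, and any later subset of those positions can be reconstructed without recalibrating. This is a minimal sketch, not the authors' Gadgetron code; the class and method names are hypothetical, and the through-time GRAPPA weight computation itself is treated as an opaque object.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable

MAX_CALIBRATED_SLICES = 12          # up to 12 pre-calibrated positions, per the text
ARMS_FULL, ARMS_ACQUIRED = 48, 12   # 12 of 48 spiral arms acquired
ACCELERATION = ARMS_FULL // ARMS_ACQUIRED  # nominal acceleration factor R = 4


@dataclass
class PrecalibratedWeights:
    """Hypothetical store of per-slice GRAPPA weights computed at session start."""
    weights: Dict[int, Any] = field(default_factory=dict)

    def calibrate(self, slice_idx: int, w: Any) -> None:
        # Store the (opaque) weight set computed from calibration data for one slice.
        if not 0 <= slice_idx < MAX_CALIBRATED_SLICES:
            raise ValueError(f"slice {slice_idx} is outside the calibrated range")
        self.weights[slice_idx] = w

    def weights_for(self, subset: Iterable[int]) -> Dict[int, Any]:
        """Return weights for any subset of calibrated slices; no new calibration scan."""
        subset = list(subset)
        missing = [s for s in subset if s not in self.weights]
        if missing:
            raise KeyError(f"slices {missing} were not pre-calibrated")
        return {s: self.weights[s] for s in subset}
```

In this sketch, switching the accelerated acquisition from one subset to another is just a lookup, which is what makes runtime slice selection cheap.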

Following acquisition, reconstructed images were transferred to a separate computer for rendering. Rendering was performed in Unity and streamed to a Microsoft HoloLens headset via Holographic Remoting. All slices are rendered at their acquired positions and orientations relative to a common coordinate frame. The user interacts with the visualization through gaze combined with the air tap, double air tap, and tap-and-drag hand gestures, following the Microsoft HoloLens interaction paradigm.

Interactions were divided into two modes, selected from a floating menu that always faces the user. In “rendering adjustment mode,” the user can rotate and resize the rendering as well as adjust its window and level. The “tap and drag” gesture begins a rotation of the common slice coordinate frame about the rendering’s center of mass. The y-axis of the rotation coordinate system is continuously aligned with the current vertical orientation of the user’s head, while the z-axis is defined by projecting the ray from the user’s head position to the rendering’s center of mass onto the system-defined x-z plane. Because these axes follow the user, motions of the rendering correspond naturally to the user’s inputs.
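The dynamic rotation axes described above can be sketched with NumPy: z is the head-to-center ray projected onto the horizontal x-z plane, y follows the head's up vector, and the slices are rotated about the center of mass with Rodrigues' formula. This is a minimal sketch under stated assumptions, not the authors' Unity code: the Gram-Schmidt step that makes head-up exactly perpendicular to z is an assumption, and the math uses a right-handed frame (Unity is left-handed, so signs of cross products and rotation directions would flip).

```python
import numpy as np


def interaction_axes(head_pos, head_up, center_of_mass):
    """Return orthonormal (x, y, z) interaction axes as the rows of a 3x3 array."""
    ray = np.asarray(center_of_mass, float) - np.asarray(head_pos, float)
    z = np.array([ray[0], 0.0, ray[2]])  # project head-to-center ray onto x-z plane
    if np.linalg.norm(z) < 1e-6:
        raise ValueError("user is looking straight up/down; horizontal ray undefined")
    z /= np.linalg.norm(z)
    y = np.asarray(head_up, float)
    y = y - np.dot(y, z) * z             # assumption: orthogonalize head-up against z
    y /= np.linalg.norm(y)
    x = np.cross(y, z)                   # right-handed completion of the frame
    return np.stack([x, y, z])


def axis_angle_matrix(axis, angle_deg):
    """Rodrigues' rotation formula for rotation by angle_deg about axis."""
    a = np.asarray(axis, float) / np.linalg.norm(axis)
    t = np.radians(angle_deg)
    K = np.array([[0.0, -a[2], a[1]],
                  [a[2], 0.0, -a[0]],
                  [-a[1], a[0], 0.0]])
    return np.eye(3) + np.sin(t) * K + (1.0 - np.cos(t)) * (K @ K)


def rotate_about_center(points, center, axis, angle_deg):
    """Rotate points (N x 3 or a single 3-vector) about center around axis."""
    R = axis_angle_matrix(axis, angle_deg)
    c = np.asarray(center, float)
    return (np.asarray(points, float) - c) @ R.T + c
```

Recomputing the axes from the current head pose at every drag update is what keeps "drag right" meaning the same thing wherever the user stands.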

Rotation, rescaling, and window/level adjustment were controlled by mapping the offset of the user’s hand, measured within a 1-meter cube centered at the position of the initial tap gesture, onto the corresponding output range: -180 to 180 degrees for rotation, -2x to +2x for scaling, and a rate of change for the window or level.
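The hand-offset mapping can be sketched as follows: the offset from the initial tap point is normalized to [-1, 1] per axis (half the 1-meter cube is 0.5 m) and clamped at the cube faces. Rotation uses it as an absolute control, while window and level use it as a rate that is integrated each frame. This is a minimal sketch; which hand axis drives which output and the `max_rate` value are hypothetical, and scaling is omitted because the abstract does not specify how its range is parameterized. The display mapping is the standard linear window/level transform.

```python
import numpy as np

HALF_CUBE_M = 0.5  # half the side of the 1-meter interaction cube centered on the tap


def normalized_offset(hand_pos, tap_pos):
    """Hand offset from the tap point (meters), scaled to [-1, 1] and clamped."""
    offset = (np.asarray(hand_pos, float) - np.asarray(tap_pos, float)) / HALF_CUBE_M
    return np.clip(offset, -1.0, 1.0)


def rotation_angle_deg(u):
    """Absolute control: normalized component u in [-1, 1] -> -180..180 degrees."""
    return 180.0 * u


def step_window_level(window, level, u_window, u_level, dt, max_rate=500.0):
    """Rate control: offsets set how fast window/level change (hypothetical max_rate,
    intensity units per second), integrated over a frame of dt seconds."""
    window = max(1.0, window + max_rate * u_window * dt)  # keep the window positive
    level = level + max_rate * u_level * dt
    return window, level


def apply_window_level(image, window, level):
    """Standard linear window/level mapping of intensities to display values in [0, 1]."""
    lo = level - window / 2.0
    return np.clip((np.asarray(image, float) - lo) / window, 0.0, 1.0)
```

Rate control suits window and level because their useful range depends on the image intensities, whereas rotation has a natural fixed range that an absolute mapping covers directly.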

In “multi-slice” interaction mode, the user can toggle between viewing all available pre-calibrated slices and only the subset currently being imaged. The user may also gaze at and tap an individual slice to display a label with its slice number, which can be entered into the scanner’s user interface to select the target slices to image. The same slice tap gesture can also send a command to turn acquisition of an individual accelerated slice on or off, allowing the user to change slices directly from the hologram.
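The multi-slice mode reduces to a small amount of state: the set of pre-calibrated slices, the subset being imaged, and a view toggle. A minimal sketch follows; the class name, label text, and the `("start" | "stop", index)` command tuple are hypothetical, and `send` stands in for whatever transport carries commands back to the scanner.

```python
from typing import Callable, Iterable, List, Tuple

Command = Tuple[str, int]  # hypothetical ("start" | "stop", slice index) message


class MultiSliceMode:
    """Illustrative state for the multi-slice interaction mode."""

    def __init__(self, calibrated: Iterable[int], imaged: Iterable[int]):
        self.calibrated = set(calibrated)
        self.imaged = set(imaged) & self.calibrated  # only calibrated slices can be imaged
        self.show_all = False

    def toggle_view(self) -> None:
        # Switch between all pre-calibrated slices and the currently imaged subset.
        self.show_all = not self.show_all

    def visible_slices(self) -> List[int]:
        return sorted(self.calibrated if self.show_all else self.imaged)

    def label(self, idx: int) -> str:
        """Label shown on gaze-and-tap; the number is what the user enters at the console."""
        return f"Slice {idx}"

    def toggle_acquisition(self, idx: int, send: Callable[[Command], None]) -> None:
        # Turn accelerated acquisition of one calibrated slice on or off.
        if idx not in self.calibrated:
            raise ValueError(f"slice {idx} was not pre-calibrated")
        if idx in self.imaged:
            self.imaged.discard(idx)
            send(("stop", idx))
        else:
            self.imaged.add(idx)
            send(("start", idx))
```

Restricting `toggle_acquisition` to calibrated slices mirrors the constraint that accelerated imaging is only possible where GRAPPA weights already exist.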

A custom communication protocol was established to specify the information to be transmitted in each networking packet between the reconstruction computer and the rendering computer, as well as to manage the scheduling of the packets.
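The abstract does not describe the packet format, so the following is only an illustration of the kind of framing such a protocol needs: a fixed-size header identifying the slice, frame counter, image size, and slice pose in the common coordinate frame, followed by the pixel data. Every field, the `b"MRRT"` magic value, and the 16-bit little-endian pixel type are hypothetical; packet scheduling is not shown.

```python
import struct

import numpy as np

# Hypothetical header: magic, packet type, slice index, frame counter, height, width,
# slice position (3 x float32), orientation quaternion (4 x float32); "<" = no padding.
HEADER = struct.Struct("<4sBHIHH3f4f")
MAGIC = b"MRRT"
IMAGE_PACKET = 1


def pack_image(slice_idx, frame, image, position, quaternion):
    """Serialize one reconstructed slice as header + little-endian uint16 pixels."""
    img = np.ascontiguousarray(image, dtype="<u2")
    h, w = img.shape
    header = HEADER.pack(MAGIC, IMAGE_PACKET, slice_idx, frame, h, w,
                         *position, *quaternion)
    return header + img.tobytes()


def unpack_image(buf):
    """Parse a packet produced by pack_image."""
    fields = HEADER.unpack_from(buf, 0)
    magic, ptype, slice_idx, frame, h, w = fields[:6]
    if magic != MAGIC or ptype != IMAGE_PACKET:
        raise ValueError("not an image packet")
    position, quaternion = fields[6:9], fields[9:13]
    pixels = np.frombuffer(buf, dtype="<u2", count=h * w,
                           offset=HEADER.size).reshape(h, w)
    return slice_idx, frame, pixels, position, quaternion
```

Carrying the slice pose in every packet lets the renderer place each image at its acquired position without a separate geometry channel, at the cost of 28 bytes per slice.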

Results

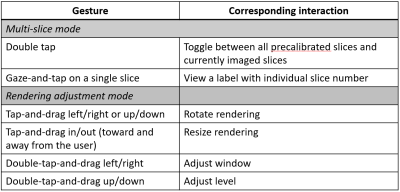

Figure 1 shows a table of the available gestures and their corresponding uses. Figure 2 shows a video from the perspective of a user wearing the headset while switching between viewing 12 pre-calibrated slices and 3 accelerated slices. Figure 3 shows a video of the user rotating a rendering, and Figure 4 shows the user resizing it. Figure 5 shows an example of window and level adjustment. These videos were collected while scanning a healthy volunteer and show the real-time view of a user operating the scanner.

Discussion

User interactions were added to a real-time data acquisition, reconstruction, and mixed-reality rendering system. A user is able to view a large collection or a subset of imaging slices in an organized and intuitive manner. Providing the ability to rotate, resize, and adjust the window and level of the rendering begins to bridge the gap between the expectations of a reading room environment and the realities of a real-time imaging system.

Hand gestures were selected over other forms of interaction, such as voice control, to provide an intuitive and non-disruptive way to interact with a three-dimensional object. Although this system is designed to operate in real-time at the scanner, the rendering adjustment features are available offline to improve retrospective visualization of image sets. Future work includes sequence programming for real-time control of scan parameters.

Acknowledgements

Siemens Healthcare, R01EB018108, NSF 1563805, R01DK098503, and R01HL094557.

References

[1] Franson D, Dupuis A, Gulani V, Griswold M, Seiberlich N. Real-time acquisition, reconstruction, and mixed-reality display system for 2D and 3D cardiac MRI. In: Proceedings of the 26th Annual Meeting of the International Society for Magnetic Resonance in Medicine. Paris; 2018. p. 598.

[2] Dupuis A, Franson D, Jiang Y, Mlakar J, Eastman H, Gulani V, Seiberlich N, Griswold M. Collaborative volumetric magnetic resonance image rendering on consumer-grade devices. In: Proceedings of the 26th Annual Meeting of the International Society for Magnetic Resonance in Medicine. Paris; 2018. p. 3417.

[3] Franson D, Dupuis A, Griswold M, Seiberlich N. Real-time imaging with HoloLens visualization and interactive slice selection for interventional guidance. In: Proceedings of the 12th Interventional MRI Symposium. Boston; 2018.

[4] Hansen MS, Sørensen TS. Gadgetron: An open source framework for medical image reconstruction. Magn. Reson. Med. 2013;69:1768–1776. doi: 10.1002/mrm.24389.

Figures